If I ask different LLMs to generate synthetic inflation data, how similar or dissimilar are the results?

Prompt: "Generate synthetic data for inflation"

The major difference between the models is the duration: Claude creates 14 years of monthly data, while Grok-3 starts off with only 1 year.

What if I’m more specific?

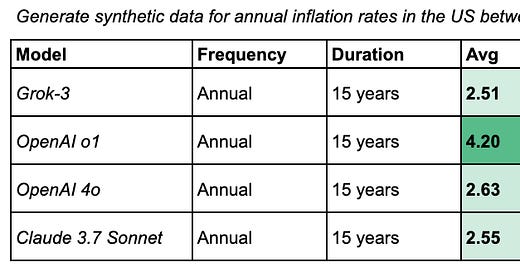

Prompt: "Generate synthetic data for annual inflation rates in the US between 2010 and 2025"

I’m baffled. Why the heck would o1 produce Python code that generates synthetic data so far from realistic values?

It uses a really bad assumption:

    # Generate a random inflation rate between 0.0% and 8.0%
    inflation_rate = round(random.uniform(0.0, 8.0), 2)

When I question o1, it quickly revises its approach.
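To see why that uniform assumption overshoots, here is a minimal sketch that reproduces it. Only the `random.uniform(0.0, 8.0)` call comes from o1's code; the function name `uniform_inflation_series`, the seeds, and the number of simulated series are my own assumptions for illustration. A uniform draw between 0% and 8% has an expected value at the midpoint, 4%, so the average lands near 4% no matter how many times you run it.

```python
import random
import statistics


def uniform_inflation_series(start_year=2010, end_year=2025,
                             low=0.0, high=8.0, seed=None):
    """Hypothetical helper: one annual rate per year, drawn the way o1's code did."""
    rng = random.Random(seed)
    return {year: round(rng.uniform(low, high), 2)
            for year in range(start_year, end_year + 1)}


# The mean of a uniform distribution is the midpoint of its range.
expected_mean = (0.0 + 8.0) / 2

# Average the per-series means over many simulated series (seeds are arbitrary).
series_means = [statistics.mean(uniform_inflation_series(seed=s).values())
                for s in range(2000)]
long_run_mean = statistics.mean(series_means)

print(f"Midpoint of the range: {expected_mean:.2f}%")
print(f"Average across simulated series: {long_run_mean:.2f}%")
```

That is in line with the 4.2% average o1 produced, and well above the roughly 2.55% FRED figure quoted at the end of this post.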

Finally, what if I ask o1 the same question 4 more times in a row? How consistent is this model from one ask to another?

Two takeaways:

Grok-3, o1, and Claude 3.7 Sonnet create more realistic synthetic data than ChatGPT4.0. Choose wisely.

When o1 created data with an average of 4.2% (way off from realistic), that was a warning. You should always run your prompt at least twice.
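One way to act on the second takeaway is to compare the mean of each run against a reference value and flag the runs that stray too far. This is a rough sketch under my own assumptions: `run_means`, `flag_runs`, and the tolerance are hypothetical, and the run values are placeholders invented for illustration, not actual model output. The 2.55 reference is the FRED figure quoted at the end of this post.

```python
import statistics


def run_means(runs):
    """Mean inflation rate (in %) for each run."""
    return [statistics.mean(run) for run in runs]


def flag_runs(runs, reference, tolerance):
    """Indices of runs whose mean is more than `tolerance` points from `reference`."""
    return [i for i, mean in enumerate(run_means(runs))
            if abs(mean - reference) > tolerance]


# Placeholder values for illustration only, NOT real model output.
runs = [
    [1.6, 3.1, 2.1, 1.5, 2.4],
    [4.9, 3.2, 6.1, 2.7, 4.1],
    [2.0, 2.8, 1.9, 3.0, 2.3],
]

means = run_means(runs)
spread = max(means) - min(means)  # how far apart the runs are from each other
flagged = flag_runs(runs, reference=2.55, tolerance=1.0)

print("Run means:", [round(m, 2) for m in means])
print("Spread between runs:", round(spread, 2))
print("Runs to re-check:", flagged)
```

Even without a reference value, a large spread between runs is a hint that at least one of them rests on a bad assumption.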

….

The actual value (from St. Louis FRED) for average annual CPI inflation in the US from 2010 to 2023 is 2.55%.

Are you just open prompting via assistants or using APIs to directly interact with models?