Everyone tells beginners:

“Use all your features. The more data, the better the model.”

That sounds good. But here’s what actually happens…

I wanted to predict how well I’d run today. Sort of like a readiness score. If the score is low, I can throttle back the workout. High score? Dial it up.

To make the prediction, I use # of miles run in the past 7 days, resting heart rate overnight, and…

the exact second I woke up

as predictors.

There are 86,400 seconds in the day. That’s 86,400 possible values.

How many times did I wake up at 6:17:03 AM in my training data?

Once.

The algorithm¹ took one look and said:

“Cool. Useless.”

So I bucketed it into hour of the day.

Now we’re talking: wake up between 4–5am? Slower runs.

6–7am? Much better.

Suddenly the algorithm found a pattern.

Not because it worked harder — but because I gave it something to work with.
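Here is a minimal sketch of that bucketing step in Python. It assumes wake-up times arrive as "HH:MM:SS" strings, and the six times below are made up for illustration. The point is the cardinality. As exact seconds, every row is unique. As hour buckets, the values repeat, so the algorithm has groups it can compare.

```python
from collections import Counter
from datetime import datetime

# Hypothetical wake-up times (HH:MM:SS); real data would span many more days.
wake_times = ["06:17:03", "06:42:51", "04:55:10", "06:05:29", "04:20:44", "06:31:12"]


def second_of_day(ts: str) -> int:
    """Exact second after midnight: one of 86,400 possible values."""
    t = datetime.strptime(ts, "%H:%M:%S")
    return t.hour * 3600 + t.minute * 60 + t.second


def wake_hour(ts: str) -> int:
    """Hour-of-day bucket: 4 means 4-5am, 6 means 6-7am, and so on."""
    return datetime.strptime(ts, "%H:%M:%S").hour


raw = [second_of_day(t) for t in wake_times]
buckets = [wake_hour(t) for t in wake_times]

# Every exact second shows up exactly once, so there's nothing to generalize from.
print("unique exact seconds:", len(set(raw)), "of", len(raw), "rows")
# Hour buckets repeat, giving the algorithm groups it can learn from.
print("rows per hour bucket:", dict(Counter(buckets)))
```

With real data, you’d feed wake_hour to the model alongside miles run and resting heart rate, and drop the raw second entirely.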

That’s the thing most people miss.

Not all features help.

Some get quietly ignored.

No warning. No crash. No fuss.

The model just gives up.

A fun way to think of this: ML quiet quitting.

This happens all the time in real work.

Say you’re predicting demand or inventory levels.

You include product_id as a feature.

Feels obvious. You’re predicting something about that product — shouldn’t the model know what it is?

But unless that exact product shows up dozens or hundreds of times in training, the model has nothing to work with.

It doesn’t know A123 is a blue running shoe or a seasonal 12-pack of soda.

To the model, it’s just a one-off code.

So it quietly sets the feature aside.

No error. No complaint. Just quiet quitting.

High Cardinality

In data science, cardinality is the number of unique values in a column.

These are usually high-cardinality, low-density features:

product_id

user_id

exact timestamps

slow-velocity SKUs

They’re too specific. They don’t show up enough.

The algorithm can’t generalize, so it stops trying.

“But how can I predict sales without knowing the product?”

You can. You just need to give the model structure, not identity.

Product ID is a name. It has no meaning by itself.

Instead, give the algorithm:

Product category

Price

Pack size or weight

Last 4-week demand

Days since last promotion

Channel or region

These show up across many products.

They create patterns the model can learn from — even for products it hasn’t seen before.

Anonymized similarity beats isolated identity.
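To make "structure, not identity" concrete, here is a toy Python sketch. It uses per-group averages as a stand-in for a trained model. That is an assumption for illustration, not a real forecasting method. The product IDs, categories, and unit counts are all hypothetical. A lookup keyed on product_id has nothing to say about a product launched after training. A lookup keyed on category still does.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sales history: (product_id, category, weekly_units)
history = [
    ("A123", "running_shoes", 40),
    ("A124", "running_shoes", 44),
    ("A125", "running_shoes", 36),
    ("B901", "soda_12pk", 120),
    ("B902", "soda_12pk", 110),
]


def fit_group_means(rows, key_index):
    """Average weekly_units per value of the chosen column (a stand-in for a real model)."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key_index]].append(row[2])
    return {key: mean(units) for key, units in groups.items()}


by_id = fit_group_means(history, key_index=0)        # keyed on identity
by_category = fit_group_means(history, key_index=1)  # keyed on shared structure

new_product_id, new_category = "A126", "running_shoes"  # launched after training
print(by_id.get(new_product_id))       # None: this ID was never seen
print(by_category.get(new_category))   # 40: the running-shoe average still applies
```

The same idea carries over to price, pack size, recent demand, and the other shared features listed above. Each one lets a new product borrow from the products that look like it.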

Quick gut check: Compression Ratio

A Compression Ratio helps identify features with too many unique values relative to your dataset size.

Here’s a fast way to test if your feature is likely to be abandoned:

Compression Ratio = Unique Values / Total Rows

If it’s near 1.0 → bad news. The model probably ignores it.

If it’s closer to 0.01 → better chance of signal.

Not perfect. But it helps you stop shipping features that don’t belong.
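The gut check fits in a few lines of Python. This sketch computes the ratio for two hypothetical columns in a made-up 1,000-row training set. The 0.9 and 0.01 cut-offs in gut_check are the loose rules of thumb from this post, not established thresholds.

```python
def compression_ratio(values) -> float:
    """Unique values divided by total rows (1.0 means every row is unique)."""
    values = list(values)
    if not values:
        raise ValueError("column is empty")
    return len(set(values)) / len(values)


def gut_check(ratio: float) -> str:
    # Rough rules of thumb, not hard limits.
    if ratio >= 0.9:
        return "bad news: likely ignored (or memorized)"
    if ratio <= 0.01:
        return "better chance of signal"
    return "somewhere in between: look closer"


# Hypothetical 1,000-row training set.
product_ids = [f"P{i:04d}" for i in range(1000)]          # every row unique
categories = ["shoes", "soda", "snacks", "apparel"] * 250  # 4 unique values

for name, column in [("product_id", product_ids), ("category", categories)]:
    ratio = compression_ratio(column)
    print(f"{name}: {ratio:.3f} -> {gut_check(ratio)}")
```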

🚨 The SHAP Value Trap

A feature getting ignored isn’t actually the worst-case scenario.

Sometimes, an algorithm might hyper-focus on a high-cardinality feature. Then, we’re really toast.

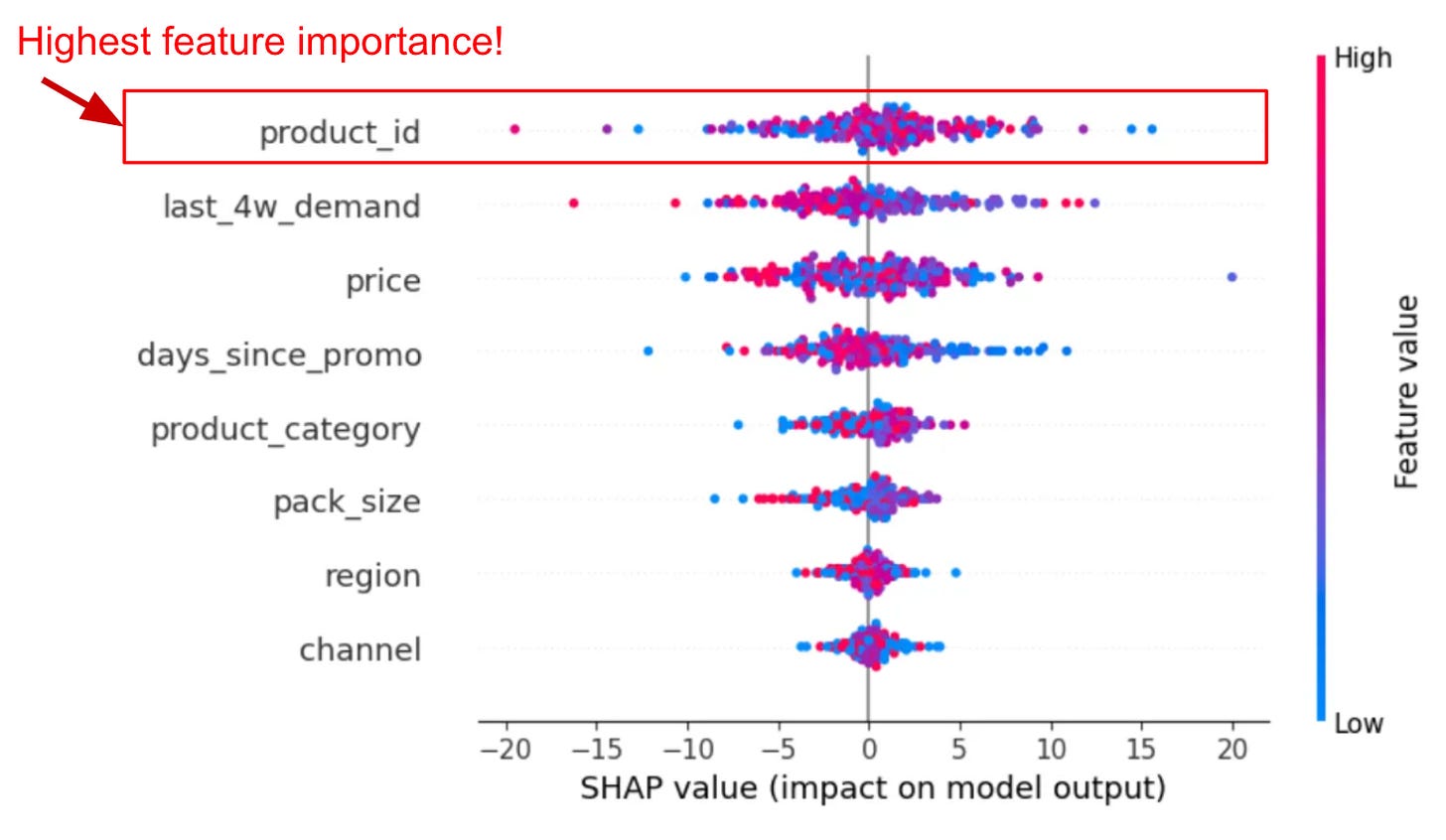

You might include a high-cardinality feature like product_id, train the model, and see it shoot to the top of your SHAP² chart.

Huge impact.

Top of the list.

Looks important.

But it’s not signal — it’s memorization.

The algorithm isn’t learning patterns. It’s just memorizing values.

Like a student cramming flashcards the night before the test — high performance on known data, total collapse on anything new.

SHAP measures impact on the model's predictions — not whether those predictions generalize.

So if you're including a feature with a high compression ratio — say, 0.9 or above — and you see a high SHAP value, be suspicious.

You’re probably looking at overfitting, not insight. The model won’t hold up when you use it to predict on new data.

Compare that with last_4w_demand. It also gets high SHAP values, but it’s capturing real patterns across many products. One memorizes specific IDs. The other finds relationships that generalize to new products.

High SHAP on a high-cardinality feature is often a red flag.

It's the model saying:

“I couldn’t learn anything general, so I just memorized everything I saw.”
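SHAP alone won’t tell you which case you’re in. Comparing error on training rows with error on products the model has never seen is a common way to spot memorization. Below is a deliberately extreme Python sketch that uses synthetic data and hypothetical demand levels per category. The "product_id model" is just a lookup table that memorizes each training row and falls back to the overall average for unknown IDs. The "category model" averages demand per category. The first looks perfect on training data and falls apart on new products. The second holds up.

```python
import random
from collections import defaultdict
from statistics import mean

random.seed(0)
# Hypothetical typical weekly demand per category.
BASE_DEMAND = {"running_shoes": 40, "soda_12pk": 120, "snacks": 300}


def make_products(prefix, n):
    """Synthetic products: demand = category base level + small noise."""
    rows = []
    for i in range(n):
        category = random.choice(list(BASE_DEMAND))
        units = BASE_DEMAND[category] + random.uniform(-5, 5)
        rows.append((f"{prefix}{i}", category, units))
    return rows


train = make_products("OLD", 300)  # one row per product_id
new = make_products("NEW", 100)    # products launched after training

# "Model" 1: memorize each product_id's demand (fallback: global mean).
id_table = {pid: units for pid, _, units in train}
global_mean = mean(units for _, _, units in train)


def predict_by_id(pid, category):
    # category is ignored on purpose: this "model" only knows identity.
    return id_table.get(pid, global_mean)


# "Model" 2: average demand per category (shared structure).
groups = defaultdict(list)
for _, category, units in train:
    groups[category].append(units)
cat_table = {c: mean(v) for c, v in groups.items()}


def predict_by_category(pid, category):
    return cat_table.get(category, global_mean)


def mae(predict, rows):
    """Mean absolute error of a predictor over (product_id, category, units) rows."""
    return mean(abs(predict(pid, cat) - units) for pid, cat, units in rows)


for name, fn in [("product_id", predict_by_id), ("category", predict_by_category)]:
    print(f"{name:>10}: train MAE {mae(fn, train):6.1f} | new-product MAE {mae(fn, new):6.1f}")
```

In a real pipeline, you’d run the same comparison with your actual model and a holdout set of products (or time periods) that never appear in training. A large gap between training and holdout error is the memorization showing through.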

Think Better

This part’s easy to forget:

The algorithm is doing the best it can with what you gave it.

If it can’t find a pattern, it doesn’t fake it.

It doesn’t guess. It just moves on.

And that’s your opportunity.

Because once you see how ML quiet quitting works, you unlock a new way of thinking:

You start designing features the model wants to use.

You stop wasting time chasing noise.

You get better predictions with less complexity.

That’s not just better modeling — it’s better thinking.

¹ I found it important to say “algorithm” instead of “model.” The algorithm is what finds the patterns; in doing so, it models the data.

² What’s SHAP? It’s a way to measure how much each feature contributes to a prediction. In the running example, miles run in the past 7 days might have a high SHAP value, while the exact second I woke up might have a very low one.

